Two neural networks fighting each other in a zero-sum game. The generative adversarial networks are among the most interesting novelty in the field of machine learning.

A Generative Adversarial Network (GAN), is an artificial intelligence algorithm used in unsupervised machine learning. Its unique feature is the use of two distinct neural networks that challenge each other in a zero-sum game.

Why are adversarial generative networks one of the most interesting innovations of artificial intelligence in the last ten years? In the previous article of this series, we have dealt with the basic concepts of deep learning, explaining how a generic artificial neural network works. The artificial neural networks have made significant progress, they are already able to recognize objects and voices better than humans. Much progress has also been made with natural language.

But if on a hand the recognition is a field of extreme interest, the results are even more surprising if we start talking about the synthesis of images (that is creating images), videos and voices.

Competing networks

The Generative Adversarial Networks are a type of neural network in which research is flourishing. The idea is quite new, introduced by Ian Goodfellow and colleagues at the University of Montreal in 2014. The article, entitled “Generative Adversarial Nets”, illustrates an architecture in which two neural networks were competing in a zero-sum game.

discriminator

The figure above illustrates how GANs operate in a context of face recognition. The discriminator is a convolutional network[1], coupled to a fully connected network. The convolutional part serves to gradually extract the salient features of the faces, while the final part serves to generalize the recognition[2].

Before training the generative adversarial network, the discriminator is initially trained separately, until it can recognize real faces with a satisfactory degree of precision. The final goal of the discriminator will be to distinguish whether a given image is of a real or artificial face, that is to “beat” the generator by spotting the fakes.

Generator

The generator, on the other hand, is a deconvolutional network[3], which takes random noise in input and tries to generate images through interpolation. The final goal is to generate realistic images, able to “cheat” the discriminator, producing faces that are more and more indistinguishable from the real ones.

GAN

Once the generative antagonist network is assembled, the images created by the generator will be the input of the discriminator.

When the discriminator provides its assessment (“real” or “artificial”), it compares it with the images present in the set of tests to determine the correctness of the judgment. If the judgment is wrong (artificial face was mistaken for true), the discriminator will learn from the error and improve the subsequent answers, while if the judgment is correct (artificial face identified as such), the feedback will help the generator to improve the new images.

This process continues until the images produced by the generator and the discriminator’s judgments reach a point of equilibrium. In practice, the two neural networks compete by “training” each other.

The results can be astonishing, as you can see in the video below, where the whole scene, including the horse, is completely transformed in real time.

Applications

GANs have by now applications in almost every field.

Image synthesis

One of the most popular applications of the generative adversarial network is image synthesis. such as generating animal images, for example by turning zebras into horses.

This kind of application (known as style transfer) allows us to completely change the graphics style, for example, recreate a photo with a Van Gogh-like style, or even with other photos’ style!

Text-to-image

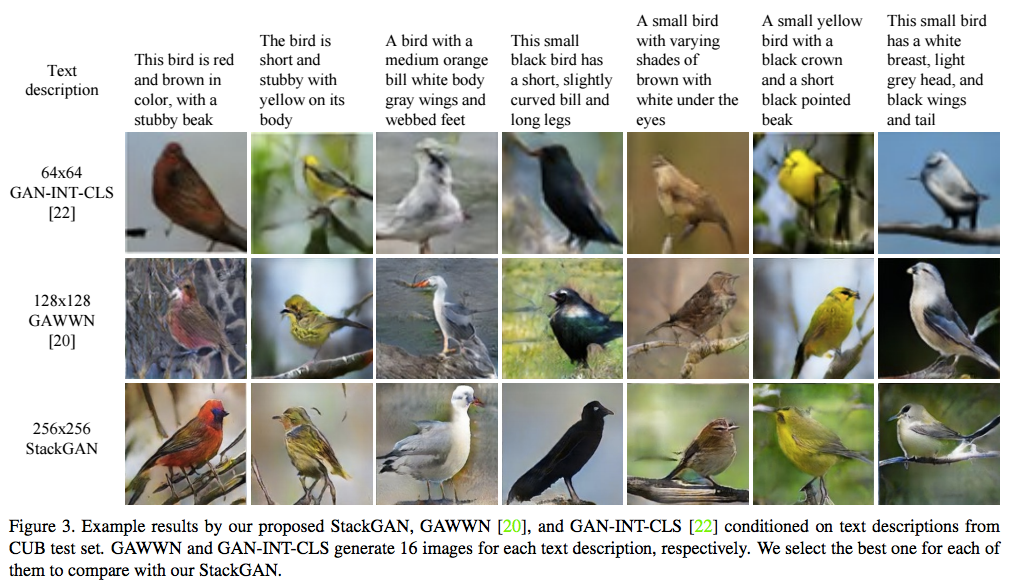

The conversion of text descriptions into images is another field where GANs are producing extremely interesting results, to the point of generating photorealistic images.

Image generation from text descriptions

Vondrick and colleagues proposed an architecture to generate videos modeling foreground and background separately.

Also promising the results of the GANs on the generation of videos with labial synchronization, as shown in the video below produced by Klingemann with Alternative Face.

Restoration

The recovery of the details of videos and images, until yesterday obtained only with manual work, today seems to be headed towards automation.

Below is an example of image denoise.

Below a GAN used to restore a video by eliminating dominant and haze from underwater videos.

Notes

[1] We will deepen the concept of ConvNet in a subsequent article.

[2] The generalization serves to make the recognition more robust to noise such as noise and rotations/translations.

[3] The term “deconvolutional” in this area is strongly debated, and not all agree. For an in-depth discussion of deconvolution in neural networks, we can refer to the excellent article by Wenzhe Shi and colleagues.

LINKS

Ian Goodfellow et al.: Generative Adversarial Nets [arXiv, pdf]

Is the deconvolution layer the same as a convolutional layer?

Could 3D GAN Be the Next Step Forward for Faster 3D Modeling? [3dprinting.com]

Generative Adversarial Networks for Beginners [O’Reilly)

Introductory guide to Generative Adversarial Networks [GANs] and their promise! [Vidhya Analythics]

Carl Vondrick et al: Generating Videos with Scene Dynamics

Deconvolution and Checkerboard Artifacts

Multi-Agent Diverse Generative Adversarial Networks [arXiv]

WaveNet: A Generative Model for Raw Audio [DeepMind]

Google’s Dueling Neural Networks Spar To Get Smarter, No Humans Required [Wired]

Generative Adversarial Networks Using Adaptive Convolution (ILCR, pdf)